10. Exploratory Data Analysis#

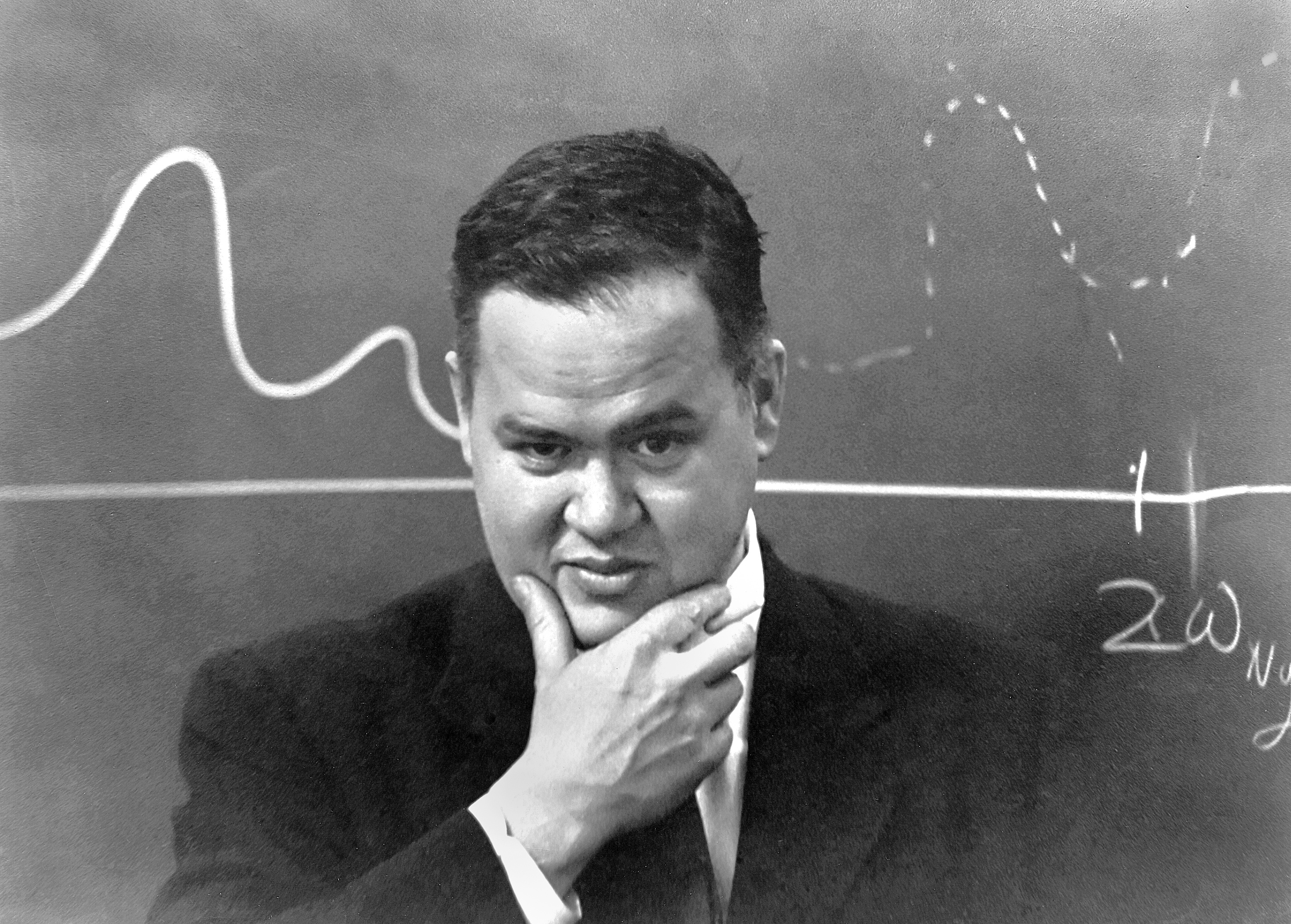

10.1. Introduction: John Tukey’s Definition of Exploratory Data Analysis (EDA)#

John W. Tukey was a prolific statistician and mathematician who coined the word “bit” to represent a unit of digital information, invented fast Fourrier transformations, and was the first person to use the word “software” in a published article. He is considered to be one of the founders of modern data science. One of his most influential contributions is the development of the concept of Exploratory Data Analysis (EDA) in a 1970 book by the same name.

We’ve covered many techniques for acquiring data and loading it as a dataframe in Python. We’ve also covered many techniques for manipulating and cleaning the data. All of that work was to prepare the data to be analyzed. EDA is the first step towards extracting meaning from a prepared dataset: we can understand the properties of indiviual features, and we can describe the relationships between features. We can report these results in tables or in static or interactive visualizations.

The goal of EDA is not to answer a specific research question, but rather to “dig in” to the data to get a better sense of the important properties of the data and to understand the key relationships in the data. EDA includes fast and simple approaches to collecting preliminary findings, for assessing the assumptions that underlie other methods, and for identifying problems and complications such as outliers.

While Tukey coined and popularized the term Exploratory Data Analysis, he defines the practice only loosely. In “We Need Both Exploratory and Confirmatory”, which appeared in The American Statistician in 1980, Tukey explains that EDA is first and foremost “an attitude, [and] … [a] flexibility” (p. 24). He continues:

No catalog of techniques can convey a willingness to look for what can be seen, whether or not anticipated. Yet this is at the heart of exploratory data analysis. The graph paper-and transparencies-are there, not as a technique, but rather as a recognition that the picture-examining eye is the best finder we have of the wholly unanticipated (p. 24).

In other words, EDA is not the kind of statistical work that begins with a specific research question or an unambiguous statement of a null hypothesis. EDA uses tables and graphs as a means for researchers to simply see what’s there. And this kind of exploration can reveal unexpected properties of the data and surprising relationships. Tukey is not saying that that these findings in and of themselves constitute proof. But he does insist that the knowledge we gain from EDA should inform our design of subsequent confirmatory data analysis (CDA). CDA is the kind of statistical work that requires a clear research question and a carefully chosen research design, and EDA can help us to develop that question and design. Tukey concludes that EDA and CDA are both necessary for good science.

EDA can be a very joyful part of a data project. Remember that most data scientists report spending somewhere in the neighborhood of 80% of their time collecting and managing data. EDA is the stage in the data pipeline during which we can, finally, apply the data to gain insight and knowledge. Think about building a car in a garage: we’ve spent so much time collecting parts and putting in the labor to install them all in exactly the right way; EDA is like the moment when we can finally take the car we’ve built out for a ride around the block.

In previous modules, especially modules 2 and 8, we used descriptive statistics and visualizations of a dataframe to identify problems with the way the data are loaded or the way the data are organized. The process of checking data for problems at the loading or manipulation stages is sometimes called initial data analysis (IDA). IDA differs from EDA in that IDA is not concerned with the goals of the research project or the ultimate questions we hope to answer through data analysis. EDA is loosely concerned with the goals of the research project: we want to explore the properties of the features that will be important for confirmatory analyses, but we want to examine the data in a way that allows unexpected and interesting stories to emerge from the data.

There is no fixed set of EDA methods: anything that helps us understand the data prior to a confirmatory model can count as EDA. Very generally, however, EDA methods can be either tabular or graphical. Tabular methods, discussed in this module, involve calculating descriptive statistics like means, variances, and percentiles, creating tables of frequencies or cross-tabulations, and simple hypothesis tests. Graphical methods, discussed in modules 11 and 12, involve data visualizations that can either be static or interactive for web-based presentations.

EDA can also involve measurement models. Sometimes we are interested in combining many features to construct an index of an underlying concept that we think influences the features in our observed data. These measurement models can be thought of as feature engineering, but if we let properties in the data define how the features should be combined, then the weights are important pieces of exploratory evidence that we can use when building confirmatory analysis designs.

10.2. Descriptive Statistics (Example: the 2019 ANES Pilot Study)#

A statistic is a value that summarizes some aspect of how data are distributed. Some statistics are called descriptive to distinguish them from inferential statistics. Descriptive statistics are statements about the sample of data that we are working with, and inferential statistics are statements about the likely values of the same statistic in the population given the sample statistic.

There are a few broad categories of descriptive statistics. Measures of location or central tendency describe the values that typical datapoints take on. These statistics include the mean, a weighted mean, and the median. Measures of variability or dispersion report the typical distance that each datapoint’s value is away from the middle or mean of the distribution and include the variance, standard deviation, and the interquartile range. These measures also can include the minimum and maximum values and various percentiles. Measures of frequency report the count of how many times each distinct value of categorical features appears in the data, or how many values of a continuous feature exist within pre-specified bins. These measures include both raw counts and percentages.

One quick way to display these descriptive statistics is to apply the .describe() method to a dataframe. See modules 2 and 8 for a discussion of .describe() and examples. Here we will cover additional EDA techniques.

To demonstrate the code to generate these descriptive statistics, we will use the American National Election Study’s (ANES) 2019 pilot study. We previously manipulated and cleaned this dataset in modules 8 and 9, so we can use the clean version here. First we import the necessary packages:

import numpy as np

import pandas as pd

import sidetable

import weighted # this is a module of wquantiles, so type pip install wquantiles or conda install wquantiles to get access to it

from scipy import stats

from sklearn import manifold

from sklearn import metrics

from matplotlib import pyplot as plt

import prince

from pandas_profiling import ProfileReport

/var/folders/4w/k9sqqcbx4dxgpjtwgv_1m29h0000gq/T/ipykernel_10398/2001120832.py:10: DeprecationWarning: `import pandas_profiling` is going to be deprecated by April 1st. Please use `import ydata_profiling` instead.

from pandas_profiling import ProfileReport

Then we load the clean data:

anes = pd.read_csv("https://github.com/jkropko/DS-6001/raw/master/localdata/anes_pilot2019_clean.csv")

anes.columns

Index(['caseid', 'liveurban', 'vote16', 'protest', 'vote',

'most_important_issue', 'confecon', 'ideology', 'partyID',

'universal_income', 'family_separation', 'free_college',

'forgive_loans', 'race', 'birthyr', 'sex', 'education', 'weight',

'fttrump', 'ftobama', 'ftbiden', 'ftwarren', 'ftsanders', 'ftbuttigieg',

'ftharris', 'ftblack', 'ftwhite', 'fthisp', 'ftasian', 'ftmuslim',

'ftillegal', 'ftjournal', 'ftnato', 'ftun', 'ftice', 'ftnra', 'ftchina',

'ftnkorea', 'ftmexico', 'ftsaudi', 'ftukraine', 'ftiran', 'ftbritain',

'ftgermany', 'ftjapan', 'ftisrael', 'ftfrance', 'ftcanada', 'ftturkey',

'ftrussia', 'ftpales', 'ftimmig', 'partisanship', 'ftbiden_level',

'age', 'age2', 'ftbiden_float', 'ftbiden_cat', 'ftbiden_str',

'prefersbiden', 'worried_econ', 'favor_both'],

dtype='object')

10.2.1. Measures of Location#

Measures of location describe the value of a “typical” value in a column, although the definition of typical will vary depending on the statistic we employ. Measures of location are also called measures of central tendency.

The simplest descriptive statistic is the mean, which we can calculate with the .mean() method. Here is the average rating from 0 to 100, with 0 meaning highly unfavorable and 100 meaning highly favorable, of Joe Biden across all of the survey’s respondents:

anes.ftbiden.mean()

42.15189466923571

Be aware that .mean() skips over NaN values in the column in calculating the mean. That may seem harmless, but it does make a specific assumption that the missing values are exactly equal to the mean of the column, and if that assumption is highly inaccurate then the calculation of the mean will also be inaccurate. For example, suppose that some people dislike Joe Biden so much that they refuse to answer the question and are coded as NaN. In that case the mean rating for Biden reported above is too high.

One way that a mean can be misleading is that it can be influenced by outliers: values of a column that are orders of magnitude higher or lower than the vast majority of values in the column. For example, out of all the majors at the University of North Carolina at Chapel Hill, the major with the highest average income after graduation is geography, not because most geography majors make more money, but because Michael Jordan was a geography major. Michael Jordan’s enormous income skews the mean upwards.

There are a few different ways to account for outliers in calculating a mean. First, we can calculate a trimmed mean. Given a percentage, a trimmed mean sorts the values of a column and removes the top and bottom percentage from the column. Then it calculates the mean from the remaining values. To calculate a trimmed mean, we can use the stats.trim_mean() function. Here is the mean of Biden’s thermometer ratings with the top and bottom 10% of values removed:

stats.trim_mean(anes.ftbiden, .1)

41.58981444926964

Another way to account for outliers is to calculate the median. The median is the same as the 50th percentile: half of the column’s values exist at or above the median and half exist at or below the median. Medians aren’t sensitive to outliers, like Michael Jordan’s income, because an outlier counts for just one observation regardless of the size of its value, and increasing the size of this value does not change the relative ordering of the rest of the values. The median Biden thermometer rating is:

anes.ftbiden.median()

42.0

Surveys like the ANES, which try to reach a random sample from a population, often find that the samples they end up with are sometimes very different demographically from the population they are trying to study. It is usually the case that some races, genders, educational levels, and socioeconomic statuses are overrepresented relative to others. One way that these surveys attempt to address these sampling biases is to calculate sampling weights that can be used to place greater or lesser emphasis on individual values when calculating statistics like means.

Let’s say, for example, that we draw a sample that contains 60% men and 40% women from a population with 50% men and 50% women. In this case, men are overrepresented in the sample and women are underrepresented. We can approximate a solution to this problem by reweighting each row with a man’s responses as .5/.6 = .833, which means that this row impacts results only 83.3% as much as a regular row. Likewise, we reweight the rows for women by .5/.4 = 1.25.

The ANES contains these sampling weights, although they are stored as strings. I first convert these weights to a numeric data type:

anes.weight = pd.to_numeric(anes.weight.str.strip().replace({'':'1'}))

anes.weight

0 1.347197

1 0.780822

2 0.966367

3 1.103485

4 1.090697

...

3160 1.000000

3161 7.036465

3162 0.892833

3163 1.581613

3164 0.809577

Name: weight, Length: 3165, dtype: float64

We can recalculate the average Biden rating while using these weights to count every row in a way that is more representative of the U.S. adult population. To calculate a weighted average, we can use the np.average() function, passing the weight column to the weights parameter. One issue with np.average() is that, unlike .mean(), is does not skip missing values: it replaces the result with NaN if there is even one missing value in the column. So to skip over missing values, we create a second version of the dataframe that removes rows for which ftbiden is missing. The weighted average of Biden’s thermometer ratings is:

anes_temp = anes.loc[~anes.ftbiden.isna()]

np.average(anes_temp['ftbiden'], weights=anes_temp.weight)

43.31193635270897

To calculate a weighted median, we use the weighted.median() function from the wquantiles package. The first argument is the feature for which we want to calculate the median, and the second argument is the feature that contains the weights:

weighted.median(anes.ftbiden, anes.weight)

47.0

10.3. Measures of Variability#

Measures of variability, also called measures of dispersion, report on how far from the mean the “typical” value in a column happens to be. The two most common measures of variability are the variance and the standard deviation, which we can calculate with the .var() and the .std() methods, respectively:

[anes.ftbiden.var(), anes.ftbiden.std()]

[1118.0106501193227, 33.436666253071984]

Like .mean() and .median(), these methods deal with missing values by skipping over them. Please keep in mind that ths behavior, while convenient, can generate highly inaccurate descriptive statistics if the missing values are very different from the mean of the column.

These statistics can be very abstract and hard to conceptualize, especially for students without a great deal of experience in statistics. One way I like to think about the standard deviation is with a map. Consider the state of Pennsylvania:

Pennsylvania has two big cities: Pittsburgh in the west and Philadelphia in the east. Suppose we want to know where in the state a “typical” resident lives, so we average the latitudinal and longitudinal coordinates of every resident’s location. The average will be somewhere in central PA, possibly near Harrisburg as Philadelphia is larger than Pittsburgh. The standard deviation is the average of each residents’ distance from the mean location near Harrisburg. In this case, the standard deviation is much higher because relatively fewer people live in or near Harrisburg, and more people live farther away.

We can see the minimum and maximum values of a column with the .min() and .max() methods. The minimum and maximum values of the Biden thermometer, unsurprisingly, are 0 and 100:

[anes.ftbiden.min(), anes.ftbiden.max()]

[0.0, 100.0]

Another way to see the variability of a column is to calculate percentiles for the column. Percentiles are called quantiles when we use proportions instead of percents, so the 60th percentile is equal to the .6 quantile. We can use the .quantile() method to calculate quantiles, passing a list of numbers for the quantiles we want to see. Here are the 10th, 25th, 33rd, 67th, 75th, and 90th percentiles for the Biden thermometer, along with the minimum, maximum, and median which are the 0th, 50th, and 100th percentiles:

anes.ftbiden.quantile([0, .1, .25, .33, .5, .67, .75, .9, 1])

0.00 0.0

0.10 1.0

0.25 7.0

0.33 16.0

0.50 42.0

0.67 60.0

0.75 70.0

0.90 90.0

1.00 100.0

Name: ftbiden, dtype: float64

A percentile is interpreted as the value in the column for which the specified percent of values are below that value. The 33rd percentile of Biden’s thermometer is 16, which means that 33% of the respondents in the ANES data rate Biden at or below 16. Percentiles can show us situations in which a column has a high degree of variability: the smaller the low percentiles are and the bigger the high percentiles are, the most variance exists in the column.

A simple way to understand the distance between high and low percentiles is to calculate the interquartile range (IQR), which is simply the difference between the 75th and 25th percentiles:

[anes.ftbiden.quantile(0.75),

anes.ftbiden.quantile(0.25),

anes.ftbiden.quantile(0.75) - anes.ftbiden.quantile(0.25)]

[70.0, 7.0, 63.0]

10.3.1. Measures of Frequency#

Categorical features can be either ordered, meaning that the categories can be placed in a natural order, or unordered. If the categories are ordered, then we have two ways to calculate descriptive statistics. We can either convert the values to numeric data types and calculate the mean, median, and other statistics like we did above, or we can ignore the ordering of the categories and report the frequency of each category. If a feature’s categories cannot be placed in a natural order, then we can only use frequencies to describe the feature.

There are several ways to replace the categories of an ordered categorical feature with integers. The most straightforward way to convert this kind of column to numeric class is to use the .map() method to map numbers onto the categories they belong with. For example, we can map support for universal basic income to integers from 1 to 7, where higher numbers indicate more support:

anes['ui_num'] = anes.universal_income.map({'Oppose a great deal':1,

'Oppose a moderate amount':2,

'Oppose a little':3,

'Neither favor nor oppose':4,

'Favor a little':5,

'Favor a moderate amount':6,

'Favor a great deal':7})

anes.ui_num

0 6

1 2

2 4

3 4

4 1

..

3160 1

3161 7

3162 1

3163 6

3164 1

Name: ui_num, Length: 3165, dtype: int64

The mean, median, standard deviation, and interquartile range of support for universal basic income on a 1-7 scale, with higher numbers indicating more favorability, are:

[anes.ui_num.mean(),

anes.ui_num.median(),

anes.ui_num.std(),

anes.ui_num.quantile(.75)-anes.ui_num.quantile(.25)]

[3.51911532385466, 4.0, 2.1342500409577876, 4.0]

To see the frequency of each category, we can use the value_counts() method. Here are the categories for universal_income:

anes.universal_income.value_counts()

universal_income

Oppose a great deal 1007

Neither favor nor oppose 704

Favor a great deal 377

Favor a little 349

Favor a moderate amount 321

Oppose a moderate amount 216

Oppose a little 191

Name: count, dtype: int64

The .stb.freq method from the sidetable package provides a dataframe presentation of the same information and includes percentages:

anes.stb.freq(['universal_income'])

| universal_income | count | percent | cumulative_count | cumulative_percent | |

|---|---|---|---|---|---|

| 0 | Oppose a great deal | 1007 | 31.816746 | 1007 | 31.816746 |

| 1 | Neither favor nor oppose | 704 | 22.243286 | 1711 | 54.060032 |

| 2 | Favor a great deal | 377 | 11.911532 | 2088 | 65.971564 |

| 3 | Favor a little | 349 | 11.026856 | 2437 | 76.998420 |

| 4 | Favor a moderate amount | 321 | 10.142180 | 2758 | 87.140600 |

| 5 | Oppose a moderate amount | 216 | 6.824645 | 2974 | 93.965245 |

| 6 | Oppose a little | 191 | 6.034755 | 3165 | 100.000000 |

To calculate weighted frequencies, we use .groupby(): first we calculate the sum of weights within each category of the categorical feature, then we divide by the sum of weights across the entire dataframe. The resulting frequencies are the accurate ones given the sampling weights to make the sample more representative of the population. The weighted percents for universal_income are:

pd.Series(anes[['universal_income','weight']].groupby('universal_income').sum()['weight'])/anes['weight'].sum()

universal_income

Favor a great deal 0.124589

Favor a little 0.113442

Favor a moderate amount 0.104484

Neither favor nor oppose 0.225966

Oppose a great deal 0.302949

Oppose a little 0.060453

Oppose a moderate amount 0.068117

Name: weight, dtype: float64

A continuous-valued numeric feature can be turned into a categorical feature with the pd.cut() function, which takes two arguments: first, the column that we want to cut into categories, and second either the number of equally sized bins or a list of the cutpoints for unequaly sized bins. To create 10 equally spaced bins for the Biden thermometer, we type:

binnedbiden = pd.cut(anes['ftbiden'], 10)

binnedbiden.value_counts()

ftbiden

(-0.1, 10.0] 909

(90.0, 100.0] 308

(40.0, 50.0] 293

(50.0, 60.0] 292

(60.0, 70.0] 253

(80.0, 90.0] 252

(10.0, 20.0] 234

(70.0, 80.0] 205

(30.0, 40.0] 192

(20.0, 30.0] 176

Name: count, dtype: int64

The intervals by default include the upper-bound but do not include the lower-bound. To specify the cutpoints, we start with a number slightly lower than the minimum value so that the minimum is included in the lowest bin. To create our own unequally spaced bins for the Biden thermometer, we type:

binnedbiden = pd.cut(anes['ftbiden'], [-.1, 0, 10, 30, 50, 70, 99, 100])

binnedbiden.value_counts()

ftbiden

(70.0, 99.0] 670

(0.0, 10.0] 644

(50.0, 70.0] 545

(30.0, 50.0] 485

(10.0, 30.0] 410

(-0.1, 0.0] 265

(99.0, 100.0] 95

Name: count, dtype: int64

10.3.2. Showing all Descriptive Statistics At Once#

The pandas_profiling package includes an incredible tool for EDA. The ProfileReport() calculates all of the descriptive statistics discussed above for every feature in the data and organizes this information in a well-designed HTML format that can be embedded directly into a Jupyter notebook.

There are two versions of ProfileReport(). With minimal=True, the function generates a report that tells us the dimensions of the dataframe, as well as the amount of missingness and duplicate rows, and the amount of memory the dataframe takes up. In addition, the report includes the following information for each feature: the number of distinct values (and the percent of values that are unique) for each feature (here called variables), as well as the number of (and percent of) missing, infinite, and zero values, the mean, the minimum and maximum, and the amount of memory the feature takes up, and displays a histogram or bar chart. Finally, the report also generates warnings to help us identify important properties of the data, such as

which columns have missing values,

which columns are unique, meaning that no two rows share the same value,

and which columns have a high degree of cardinality, meaning that most (but not all) of the values in the column are unique.

Depending on the size of the dataframe, the full report with minimal=True may take a few minutes to load into your notebook. The report is displayed below. Please take some time now to scroll through the report and familiarize yourself with the information it contains:

profile = ProfileReport(anes,

title='Pandas Profiling Report',

html={'style':{'full_width':True}},

minimal=True)

profile.to_notebook_iframe()

If we set minimal=False, then the report will take quite a bit longer to compile. But once it does, the report will contain all of the information we see with minimal=True, as well as additional visualizations that help us see potential interactions in the data, understand correlations between features and missingness patterns, and show us the head and tail of the dataframe:

profile = ProfileReport(anes,

title='Pandas Profiling Report',

html={'style':{'full_width':True}},

minimal=False)

profile.to_notebook_iframe()

---------------------------------------------------------------------------

KeyboardInterrupt Traceback (most recent call last)

Cell In[21], line 5

1 profile = ProfileReport(anes,

2 title='Pandas Profiling Report',

3 html={'style':{'full_width':True}},

4 minimal=False)

----> 5 profile.to_notebook_iframe()

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/ydata_profiling/profile_report.py:526, in ProfileReport.to_notebook_iframe(self)

524 with warnings.catch_warnings():

525 warnings.simplefilter("ignore")

--> 526 display(get_notebook_iframe(self.config, self))

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/ydata_profiling/report/presentation/flavours/widget/notebook.py:75, in get_notebook_iframe(config, profile)

73 output = get_notebook_iframe_src(config, profile)

74 elif attribute == IframeAttribute.srcdoc:

---> 75 output = get_notebook_iframe_srcdoc(config, profile)

76 else:

77 raise ValueError(

78 f'Iframe Attribute can be "src" or "srcdoc" (current: {attribute}).'

79 )

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/ydata_profiling/report/presentation/flavours/widget/notebook.py:29, in get_notebook_iframe_srcdoc(config, profile)

27 width = config.notebook.iframe.width

28 height = config.notebook.iframe.height

---> 29 src = html.escape(profile.to_html())

31 iframe = f'<iframe width="{width}" height="{height}" srcdoc="{src}" frameborder="0" allowfullscreen></iframe>'

33 return HTML(iframe)

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/ydata_profiling/profile_report.py:496, in ProfileReport.to_html(self)

488 def to_html(self) -> str:

489 """Generate and return complete template as lengthy string

490 for using with frameworks.

491

(...)

494

495 """

--> 496 return self.html

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/ydata_profiling/profile_report.py:292, in ProfileReport.html(self)

289 @property

290 def html(self) -> str:

291 if self._html is None:

--> 292 self._html = self._render_html()

293 return self._html

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/ydata_profiling/profile_report.py:409, in ProfileReport._render_html(self)

406 def _render_html(self) -> str:

407 from ydata_profiling.report.presentation.flavours import HTMLReport

--> 409 report = self.report

411 with tqdm(

412 total=1, desc="Render HTML", disable=not self.config.progress_bar

413 ) as pbar:

414 html = HTMLReport(copy.deepcopy(report)).render(

415 nav=self.config.html.navbar_show,

416 offline=self.config.html.use_local_assets,

(...)

424 version=self.description_set.package["ydata_profiling_version"],

425 )

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/ydata_profiling/profile_report.py:286, in ProfileReport.report(self)

283 @property

284 def report(self) -> Root:

285 if self._report is None:

--> 286 self._report = get_report_structure(self.config, self.description_set)

287 return self._report

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/ydata_profiling/profile_report.py:268, in ProfileReport.description_set(self)

265 @property

266 def description_set(self) -> BaseDescription:

267 if self._description_set is None:

--> 268 self._description_set = describe_df(

269 self.config,

270 self.df,

271 self.summarizer,

272 self.typeset,

273 self._sample,

274 )

275 return self._description_set

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/ydata_profiling/model/describe.py:130, in describe(config, df, summarizer, typeset, sample)

126 scatter_matrix: Dict[Any, Dict[Any, Any]] = {

127 x: {y: None} for x, y in scatter_tasks

128 }

129 for x, y in scatter_tasks:

--> 130 scatter_matrix[x][y] = progress(

131 get_scatter_plot, pbar, f"scatter {x}, {y}"

132 )(config, df, x, y, interval_columns)

134 # missing diagrams

135 missing_map = get_missing_active(config, table_stats)

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/ydata_profiling/utils/progress_bar.py:11, in progress.<locals>.inner(*args, **kwargs)

8 @wraps(fn)

9 def inner(*args, **kwargs) -> Any:

10 bar.set_postfix_str(message)

---> 11 ret = fn(*args, **kwargs)

12 bar.update()

13 return ret

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/ydata_profiling/model/pairwise.py:31, in get_scatter_plot(config, df, x, y, continuous_variables)

29 else:

30 df_temp = df[[x, y]].dropna()

---> 31 return scatter_pairwise(config, df_temp[x], df_temp[y], x, y)

32 else:

33 return ""

File ~/.pyenv/versions/3.12.5/lib/python3.12/contextlib.py:81, in ContextDecorator.__call__.<locals>.inner(*args, **kwds)

78 @wraps(func)

79 def inner(*args, **kwds):

80 with self._recreate_cm():

---> 81 return func(*args, **kwds)

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/ydata_profiling/visualisation/plot.py:374, in scatter_pairwise(config, series1, series2, x_label, y_label)

372 else:

373 plt.scatter(series1[indices], series2[indices], color=color)

--> 374 return plot_360_n0sc0pe(config)

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/ydata_profiling/visualisation/utils.py:73, in plot_360_n0sc0pe(config, image_format, bbox_extra_artists, bbox_inches)

70 if image_format == "svg":

71 image_str = StringIO()

---> 73 plt.savefig(

74 image_str,

75 format=image_format,

76 bbox_extra_artists=bbox_extra_artists,

77 bbox_inches=bbox_inches,

78 )

79 plt.close()

80 result_string = image_str.getvalue()

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/matplotlib/pyplot.py:1228, in savefig(*args, **kwargs)

1225 fig = gcf()

1226 # savefig default implementation has no return, so mypy is unhappy

1227 # presumably this is here because subclasses can return?

-> 1228 res = fig.savefig(*args, **kwargs) # type: ignore[func-returns-value]

1229 fig.canvas.draw_idle() # Need this if 'transparent=True', to reset colors.

1230 return res

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/matplotlib/figure.py:3395, in Figure.savefig(self, fname, transparent, **kwargs)

3393 for ax in self.axes:

3394 _recursively_make_axes_transparent(stack, ax)

-> 3395 self.canvas.print_figure(fname, **kwargs)

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/matplotlib/backend_bases.py:2204, in FigureCanvasBase.print_figure(self, filename, dpi, facecolor, edgecolor, orientation, format, bbox_inches, pad_inches, bbox_extra_artists, backend, **kwargs)

2200 try:

2201 # _get_renderer may change the figure dpi (as vector formats

2202 # force the figure dpi to 72), so we need to set it again here.

2203 with cbook._setattr_cm(self.figure, dpi=dpi):

-> 2204 result = print_method(

2205 filename,

2206 facecolor=facecolor,

2207 edgecolor=edgecolor,

2208 orientation=orientation,

2209 bbox_inches_restore=_bbox_inches_restore,

2210 **kwargs)

2211 finally:

2212 if bbox_inches and restore_bbox:

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/matplotlib/backend_bases.py:2054, in FigureCanvasBase._switch_canvas_and_return_print_method.<locals>.<lambda>(*args, **kwargs)

2050 optional_kws = { # Passed by print_figure for other renderers.

2051 "dpi", "facecolor", "edgecolor", "orientation",

2052 "bbox_inches_restore"}

2053 skip = optional_kws - {*inspect.signature(meth).parameters}

-> 2054 print_method = functools.wraps(meth)(lambda *args, **kwargs: meth(

2055 *args, **{k: v for k, v in kwargs.items() if k not in skip}))

2056 else: # Let third-parties do as they see fit.

2057 print_method = meth

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/matplotlib/backends/backend_svg.py:1339, in FigureCanvasSVG.print_svg(self, filename, bbox_inches_restore, metadata)

1334 w, h = width * 72, height * 72

1335 renderer = MixedModeRenderer(

1336 self.figure, width, height, dpi,

1337 RendererSVG(w, h, fh, image_dpi=dpi, metadata=metadata),

1338 bbox_inches_restore=bbox_inches_restore)

-> 1339 self.figure.draw(renderer)

1340 renderer.finalize()

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/matplotlib/artist.py:95, in _finalize_rasterization.<locals>.draw_wrapper(artist, renderer, *args, **kwargs)

93 @wraps(draw)

94 def draw_wrapper(artist, renderer, *args, **kwargs):

---> 95 result = draw(artist, renderer, *args, **kwargs)

96 if renderer._rasterizing:

97 renderer.stop_rasterizing()

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/matplotlib/artist.py:72, in allow_rasterization.<locals>.draw_wrapper(artist, renderer)

69 if artist.get_agg_filter() is not None:

70 renderer.start_filter()

---> 72 return draw(artist, renderer)

73 finally:

74 if artist.get_agg_filter() is not None:

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/matplotlib/figure.py:3162, in Figure.draw(self, renderer)

3159 # ValueError can occur when resizing a window.

3161 self.patch.draw(renderer)

-> 3162 mimage._draw_list_compositing_images(

3163 renderer, self, artists, self.suppressComposite)

3165 renderer.close_group('figure')

3166 finally:

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/matplotlib/image.py:132, in _draw_list_compositing_images(renderer, parent, artists, suppress_composite)

130 if not_composite or not has_images:

131 for a in artists:

--> 132 a.draw(renderer)

133 else:

134 # Composite any adjacent images together

135 image_group = []

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/matplotlib/artist.py:72, in allow_rasterization.<locals>.draw_wrapper(artist, renderer)

69 if artist.get_agg_filter() is not None:

70 renderer.start_filter()

---> 72 return draw(artist, renderer)

73 finally:

74 if artist.get_agg_filter() is not None:

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/matplotlib/axes/_base.py:3137, in _AxesBase.draw(self, renderer)

3134 if artists_rasterized:

3135 _draw_rasterized(self.figure, artists_rasterized, renderer)

-> 3137 mimage._draw_list_compositing_images(

3138 renderer, self, artists, self.figure.suppressComposite)

3140 renderer.close_group('axes')

3141 self.stale = False

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/matplotlib/image.py:132, in _draw_list_compositing_images(renderer, parent, artists, suppress_composite)

130 if not_composite or not has_images:

131 for a in artists:

--> 132 a.draw(renderer)

133 else:

134 # Composite any adjacent images together

135 image_group = []

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/matplotlib/artist.py:72, in allow_rasterization.<locals>.draw_wrapper(artist, renderer)

69 if artist.get_agg_filter() is not None:

70 renderer.start_filter()

---> 72 return draw(artist, renderer)

73 finally:

74 if artist.get_agg_filter() is not None:

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/matplotlib/collections.py:1005, in _CollectionWithSizes.draw(self, renderer)

1002 @artist.allow_rasterization

1003 def draw(self, renderer):

1004 self.set_sizes(self._sizes, self.figure.dpi)

-> 1005 super().draw(renderer)

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/matplotlib/artist.py:72, in allow_rasterization.<locals>.draw_wrapper(artist, renderer)

69 if artist.get_agg_filter() is not None:

70 renderer.start_filter()

---> 72 return draw(artist, renderer)

73 finally:

74 if artist.get_agg_filter() is not None:

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/matplotlib/collections.py:426, in Collection.draw(self, renderer)

417 ipaths, ilinestyles = self._get_inverse_paths_linestyles()

418 renderer.draw_path_collection(

419 gc, transform.frozen(), ipaths,

420 self.get_transforms(), offsets, offset_trf,

(...)

423 self._antialiaseds, self._urls,

424 "screen")

--> 426 renderer.draw_path_collection(

427 gc, transform.frozen(), paths,

428 self.get_transforms(), offsets, offset_trf,

429 self.get_facecolor(), self.get_edgecolor(),

430 self._linewidths, self._linestyles,

431 self._antialiaseds, self._urls,

432 "screen") # offset_position, kept for backcompat.

434 gc.restore()

435 renderer.close_group(self.__class__.__name__)

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/matplotlib/backends/backend_svg.py:756, in RendererSVG.draw_path_collection(self, gc, master_transform, paths, all_transforms, offsets, offset_trans, facecolors, edgecolors, linewidths, linestyles, antialiaseds, urls, offset_position)

754 if url is not None:

755 writer.start('a', attrib={'xlink:href': url})

--> 756 clip_attrs = self._get_clip_attrs(gc0)

757 if clip_attrs:

758 writer.start('g', **clip_attrs)

File ~/.pyenv/versions/3.12.5/lib/python3.12/site-packages/matplotlib/backends/backend_svg.py:600, in RendererSVG._get_clip_attrs(self, gc)

598 else:

599 return {}

--> 600 clip = self._clipd.get(dictkey)

601 if clip is None:

602 oid = self._make_id('p', dictkey)

KeyboardInterrupt:

10.4. Ways to Understand Relationships#

Descriptive statistics are ways to understand characteristics of particular, individual features in the data. But there are different methods to understand the relationship between two features, the choice of which depends on whether the relationship is between two continuous features, between two categorical features, or between one continuous and one categorical feature.

While these methods describe correlations, they do not demonstrate that one feature is a cause for the other. There are many additional criteria we have to take into consideration if we are trying to establish causal inference. That said, these methods work well for EDA as they can reveal meaningful patters that exist in the data.

10.4.1. Correlations#

Relationships between two continuous features can be quantified with a correlation: a number that can be positive, negative, or zero. Positive numbers indicate a positive relationship: the two features tend to increase together or decrease together. Negative numbers indicate a negative relationship: increases in one feature tend to occur with decreases in the other. Zero indicates that there is no discernible relationship. The most common correlation is Pearson’s correlation coefficient, which can take on any real value between -1 and 1. There are other correlation measures, including Spearman’s rank correlation coefficient, Kendall’s tau, and intraclass correlation. There are subtle differences between these measures, but here we will focus on Pearson’s coefficient.

To calculate the correlation between two features, isolate the two columns and apply the .corr() method. Here we can measure the correlation between Biden and Trump’s thermometer ratings:

anes[['fttrump', 'ftbiden']].corr()

| fttrump | ftbiden | |

|---|---|---|

| fttrump | 1.000000 | -0.646357 |

| ftbiden | -0.646357 | 1.000000 |

The .corr() method produces a symmetric matrix with 1s on the diagonal (indicating that each feature is perfectly correlated with itself), and the correlation between the two features in the off-diagonal elements. In this case, the correlation is negative, meaning that the more highly a person rates Biden, the lower they rate Trump, and vice versa.

To display the correlations for more than two features, pass more columns to the .corr() method. If we use .loc to select all of the feeling thermometer features, then .corr() places all the correlations in a symmetric matrix as follows:

anes.loc[:,'fttrump':'ftimmig'].corr()

| fttrump | ftobama | ftbiden | ftwarren | ftsanders | ftbuttigieg | ftharris | ftblack | ftwhite | fthisp | ... | ftbritain | ftgermany | ftjapan | ftisrael | ftfrance | ftcanada | ftturkey | ftrussia | ftpales | ftimmig | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| fttrump | 1.000000 | -0.754178 | -0.646357 | -0.699664 | -0.678443 | -0.588964 | -0.616295 | -0.088143 | 0.240802 | -0.128286 | ... | -0.021959 | -0.266252 | -0.032216 | 0.431513 | -0.363577 | -0.273404 | -0.044590 | 0.242000 | -0.378096 | -0.088958 |

| ftobama | -0.754178 | 1.000000 | 0.805100 | 0.783064 | 0.720092 | 0.714641 | 0.743463 | 0.222713 | -0.096177 | 0.223919 | ... | 0.139026 | 0.329477 | 0.106416 | -0.292210 | 0.448712 | 0.342440 | 0.187500 | -0.117613 | 0.430379 | 0.177041 |

| ftbiden | -0.646357 | 0.805100 | 1.000000 | 0.733601 | 0.664075 | 0.728557 | 0.730069 | 0.171006 | -0.028490 | 0.171773 | ... | 0.181856 | 0.324174 | 0.104006 | -0.210108 | 0.433085 | 0.346677 | 0.201423 | -0.093490 | 0.406408 | 0.136857 |

| ftwarren | -0.699664 | 0.783064 | 0.733601 | 1.000000 | 0.798636 | 0.706680 | 0.799202 | 0.224285 | -0.086150 | 0.241548 | ... | 0.142118 | 0.362469 | 0.133061 | -0.274250 | 0.462700 | 0.352000 | 0.199888 | -0.095917 | 0.503494 | 0.185739 |

| ftsanders | -0.678443 | 0.720092 | 0.664075 | 0.798636 | 1.000000 | 0.612547 | 0.695092 | 0.178376 | -0.097548 | 0.203581 | ... | 0.105691 | 0.317619 | 0.117220 | -0.301007 | 0.423979 | 0.307537 | 0.222943 | -0.020067 | 0.465069 | 0.133447 |

| ftbuttigieg | -0.588964 | 0.714641 | 0.728557 | 0.706680 | 0.612547 | 1.000000 | 0.736301 | 0.161853 | -0.022885 | 0.183849 | ... | 0.216310 | 0.413393 | 0.166988 | -0.188748 | 0.471103 | 0.365010 | 0.211182 | -0.051666 | 0.463869 | 0.169029 |

| ftharris | -0.616295 | 0.743463 | 0.730069 | 0.799202 | 0.695092 | 0.736301 | 1.000000 | 0.215413 | -0.074303 | 0.222411 | ... | 0.147331 | 0.360846 | 0.138585 | -0.218450 | 0.450358 | 0.322747 | 0.233789 | -0.032494 | 0.486032 | 0.165644 |

| ftblack | -0.088143 | 0.222713 | 0.171006 | 0.224285 | 0.178376 | 0.161853 | 0.215413 | 1.000000 | 0.434286 | 0.723005 | ... | 0.331453 | 0.289326 | 0.347317 | 0.257312 | 0.315790 | 0.307676 | 0.180295 | 0.042982 | 0.252483 | 0.537916 |

| ftwhite | 0.240802 | -0.096177 | -0.028490 | -0.086150 | -0.097548 | -0.022885 | -0.074303 | 0.434286 | 1.000000 | 0.416440 | ... | 0.347429 | 0.236740 | 0.298695 | 0.346157 | 0.184578 | 0.253947 | 0.121547 | 0.149674 | 0.038458 | 0.309098 |

| fthisp | -0.128286 | 0.223919 | 0.171773 | 0.241548 | 0.203581 | 0.183849 | 0.222411 | 0.723005 | 0.416440 | 1.000000 | ... | 0.380352 | 0.363587 | 0.422253 | 0.258848 | 0.372019 | 0.385854 | 0.217107 | 0.089296 | 0.285451 | 0.645328 |

| ftasian | -0.031700 | 0.123806 | 0.093182 | 0.151839 | 0.120267 | 0.129914 | 0.126617 | 0.655427 | 0.528256 | 0.735956 | ... | 0.434166 | 0.391136 | 0.528283 | 0.304042 | 0.368933 | 0.409607 | 0.181775 | 0.105242 | 0.228492 | 0.617315 |

| ftmuslim | -0.458024 | 0.550868 | 0.463104 | 0.534428 | 0.485082 | 0.494359 | 0.510411 | 0.504421 | 0.144579 | 0.540324 | ... | 0.281924 | 0.452412 | 0.335979 | -0.025442 | 0.514798 | 0.383945 | 0.355234 | 0.074838 | 0.552143 | 0.455185 |

| ftillegal | -0.610016 | 0.644172 | 0.562951 | 0.675027 | 0.625150 | 0.567295 | 0.636316 | 0.358561 | -0.005061 | 0.458139 | ... | 0.176073 | 0.386416 | 0.212992 | -0.149955 | 0.481244 | 0.333786 | 0.285270 | 0.032984 | 0.564615 | 0.410194 |

| ftjournal | -0.643103 | 0.703190 | 0.654196 | 0.718289 | 0.644384 | 0.662666 | 0.668522 | 0.283701 | 0.021498 | 0.298506 | ... | 0.243825 | 0.458552 | 0.207698 | -0.195499 | 0.559372 | 0.427888 | 0.229343 | -0.044028 | 0.530086 | 0.291537 |

| ftnato | -0.381275 | 0.503913 | 0.521605 | 0.509323 | 0.403909 | 0.546362 | 0.505836 | 0.256728 | 0.133711 | 0.316018 | ... | 0.443815 | 0.529557 | 0.363440 | 0.029768 | 0.577222 | 0.522662 | 0.229762 | -0.049538 | 0.421653 | 0.323695 |

| ftun | -0.576975 | 0.670916 | 0.636527 | 0.684970 | 0.608613 | 0.647596 | 0.650612 | 0.233984 | 0.036300 | 0.268326 | ... | 0.294610 | 0.488714 | 0.210968 | -0.182560 | 0.606093 | 0.472038 | 0.293191 | -0.007171 | 0.544242 | 0.227354 |

| ftice | 0.712355 | -0.541225 | -0.411359 | -0.535019 | -0.534303 | -0.398386 | -0.452134 | 0.030010 | 0.331804 | -0.024752 | ... | 0.123087 | -0.116552 | 0.123338 | 0.491431 | -0.206956 | -0.132086 | 0.056048 | 0.256069 | -0.272755 | 0.022379 |

| ftnra | 0.769239 | -0.620586 | -0.514269 | -0.578689 | -0.543851 | -0.495196 | -0.497697 | -0.013278 | 0.284510 | -0.040818 | ... | 0.011210 | -0.201393 | 0.011289 | 0.453555 | -0.282935 | -0.205151 | 0.059867 | 0.328068 | -0.300742 | -0.024141 |

| ftchina | -0.156507 | 0.294199 | 0.304008 | 0.284309 | 0.312940 | 0.303869 | 0.334583 | 0.222639 | 0.113381 | 0.255689 | ... | 0.229706 | 0.359880 | 0.282626 | 0.127701 | 0.425073 | 0.276776 | 0.551124 | 0.494781 | 0.477169 | 0.195152 |

| ftnkorea | 0.033095 | 0.120236 | 0.144896 | 0.128233 | 0.174343 | 0.132347 | 0.176920 | 0.082878 | 0.021354 | 0.101170 | ... | 0.025271 | 0.123782 | 0.078506 | 0.082840 | 0.169177 | 0.002019 | 0.526951 | 0.624119 | 0.401922 | 0.047249 |

| ftmexico | -0.334007 | 0.426059 | 0.388208 | 0.440717 | 0.401885 | 0.403438 | 0.438171 | 0.395442 | 0.145634 | 0.530952 | ... | 0.420791 | 0.537068 | 0.451828 | 0.108958 | 0.569195 | 0.507388 | 0.379229 | 0.174035 | 0.518893 | 0.439930 |

| ftsaudi | 0.099949 | 0.081651 | 0.117200 | 0.054736 | 0.079993 | 0.128960 | 0.130886 | 0.145856 | 0.115528 | 0.169927 | ... | 0.194300 | 0.217031 | 0.224244 | 0.298359 | 0.256665 | 0.130226 | 0.626915 | 0.546692 | 0.366683 | 0.155540 |

| ftukraine | -0.132507 | 0.224765 | 0.230221 | 0.263743 | 0.205875 | 0.317908 | 0.278822 | 0.278426 | 0.176430 | 0.350238 | ... | 0.449295 | 0.527920 | 0.495899 | 0.238282 | 0.476248 | 0.421461 | 0.379648 | 0.301563 | 0.461101 | 0.374548 |

| ftiran | -0.269987 | 0.362375 | 0.320535 | 0.405235 | 0.416376 | 0.359895 | 0.414169 | 0.171180 | -0.016904 | 0.189045 | ... | 0.102198 | 0.299930 | 0.178537 | -0.034047 | 0.367061 | 0.167361 | 0.543095 | 0.435782 | 0.618094 | 0.148806 |

| ftbritain | -0.021959 | 0.139026 | 0.181856 | 0.142118 | 0.105691 | 0.216310 | 0.147331 | 0.331453 | 0.347429 | 0.380352 | ... | 1.000000 | 0.587212 | 0.586632 | 0.377525 | 0.571518 | 0.643862 | 0.235504 | 0.104608 | 0.251471 | 0.420729 |

| ftgermany | -0.266252 | 0.329477 | 0.324174 | 0.362469 | 0.317619 | 0.413393 | 0.360846 | 0.289326 | 0.236740 | 0.363587 | ... | 0.587212 | 1.000000 | 0.564738 | 0.163192 | 0.682250 | 0.650774 | 0.326201 | 0.158495 | 0.461673 | 0.380208 |

| ftjapan | -0.032216 | 0.106416 | 0.104006 | 0.133061 | 0.117220 | 0.166988 | 0.138585 | 0.347317 | 0.298695 | 0.422253 | ... | 0.586632 | 0.564738 | 1.000000 | 0.354172 | 0.481355 | 0.508379 | 0.250330 | 0.176217 | 0.299884 | 0.455836 |

| ftisrael | 0.431513 | -0.292210 | -0.210108 | -0.274250 | -0.301007 | -0.188748 | -0.218450 | 0.257312 | 0.346157 | 0.258848 | ... | 0.377525 | 0.163192 | 0.354172 | 1.000000 | 0.109896 | 0.150461 | 0.204273 | 0.216547 | -0.032737 | 0.293822 |

| ftfrance | -0.363577 | 0.448712 | 0.433085 | 0.462700 | 0.423979 | 0.471103 | 0.450358 | 0.315790 | 0.184578 | 0.372019 | ... | 0.571518 | 0.682250 | 0.481355 | 0.109896 | 1.000000 | 0.649580 | 0.377959 | 0.120297 | 0.511657 | 0.360284 |

| ftcanada | -0.273404 | 0.342440 | 0.346677 | 0.352000 | 0.307537 | 0.365010 | 0.322747 | 0.307676 | 0.253947 | 0.385854 | ... | 0.643862 | 0.650774 | 0.508379 | 0.150461 | 0.649580 | 1.000000 | 0.223369 | 0.021592 | 0.340197 | 0.401706 |

| ftturkey | -0.044590 | 0.187500 | 0.201423 | 0.199888 | 0.222943 | 0.211182 | 0.233789 | 0.180295 | 0.121547 | 0.217107 | ... | 0.235504 | 0.326201 | 0.250330 | 0.204273 | 0.377959 | 0.223369 | 1.000000 | 0.498978 | 0.467913 | 0.204421 |

| ftrussia | 0.242000 | -0.117613 | -0.093490 | -0.095917 | -0.020067 | -0.051666 | -0.032494 | 0.042982 | 0.149674 | 0.089296 | ... | 0.104608 | 0.158495 | 0.176217 | 0.216547 | 0.120297 | 0.021592 | 0.498978 | 1.000000 | 0.251593 | 0.070771 |

| ftpales | -0.378096 | 0.430379 | 0.406408 | 0.503494 | 0.465069 | 0.463869 | 0.486032 | 0.252483 | 0.038458 | 0.285451 | ... | 0.251471 | 0.461673 | 0.299884 | -0.032737 | 0.511657 | 0.340197 | 0.467913 | 0.251593 | 1.000000 | 0.268191 |

| ftimmig | -0.088958 | 0.177041 | 0.136857 | 0.185739 | 0.133447 | 0.169029 | 0.165644 | 0.537916 | 0.309098 | 0.645328 | ... | 0.420729 | 0.380208 | 0.455836 | 0.293822 | 0.360284 | 0.401706 | 0.204421 | 0.070771 | 0.268191 | 1.000000 |

34 rows × 34 columns

10.4.2. Conditional Means and Other Statistics#

The best way to describe the relationship between a categorical feature and a continuous one is with a conditional means table: a table with one row for every category with a column for the means of the observations within each category. We created tables exactly like this with .groupby() while performing data aggregation in module 8, so we can employ the same code here. In addition to conditional means, we can include other conditional statistics as well.

For example, to see the relationship between party affiliation and the Biden thermometer ratings, we can construct a table to display these conditional means:

anes.groupby('partyID').agg({'ftbiden':'mean'}).round(2)

| ftbiden | |

|---|---|

| partyID | |

| Democrat | 66.38 |

| Independent | 35.26 |

| Republican | 18.82 |

So Democrats rate Biden 66.38, on average, independents rate Biden 35.26, and Republicans rate Biden 18.82. So there is a clear relationship between party affiliation and Biden thermometer ratings. We can include additional descriptive statistics in this table, such as the median and 25th and 75th percentiles. Because .agg() does not have a shortcut to the quantile function, we first create functions for the 25th and 75th percentiles ourselves and pass these function to .agg():

def q25(x): return x.quantile(0.25)

def q75(x): return x.quantile(0.75)

anes.groupby('partyID').agg({'ftbiden':['mean', 'median', q25, q75]}).round(2)

| ftbiden | ||||

|---|---|---|---|---|

| mean | median | q25 | q75 | |

| partyID | ||||

| Democrat | 66.38 | 70.0 | 50.0 | 88.0 |

| Independent | 35.26 | 33.0 | 6.0 | 53.0 |

| Republican | 18.82 | 8.0 | 1.0 | 31.0 |

We can include more than one continous feature in the table by adding entries to the dictionary we pass to .agg(). Here we can add the Trump thermometer in addition to the Biden thermometer:

anes.groupby('partyID').agg({'ftbiden':['mean', 'median', q25, q75],

'fttrump':['mean', 'median', q25, q75]}).round(2)

| ftbiden | fttrump | |||||||

|---|---|---|---|---|---|---|---|---|

| mean | median | q25 | q75 | mean | median | q25 | q75 | |

| partyID | ||||||||

| Democrat | 66.38 | 70.0 | 50.0 | 88.0 | 11.21 | 2.0 | 0.00 | 7.0 |

| Independent | 35.26 | 33.0 | 6.0 | 53.0 | 40.89 | 37.0 | 2.50 | 73.5 |

| Republican | 18.82 | 8.0 | 1.0 | 31.0 | 82.36 | 94.0 | 74.75 | 100.0 |

We can see simple relationships such as the average age of people who have and who have not attended protests:

anes.groupby('protest').agg({'age':'mean'}).round(2)

| age | |

|---|---|

| protest | |

| False | 52.33 |

| True | 48.03 |

10.4.3. Cross-Tabulations#

A cross-tab describes the relationship between two categorical features. The categories of one feature comprise the rows, and the categories of the other feature comprise the columns, and the cells contain a statistic (often the frequency) calculated from the subset of rows that have the specific values for the two categorical features.

There are a few different ways to create a cross-tab in Python, but the easiest and best way uses the pd.crosstab() function, which takes two arguments: the feature whose categories define the rows, and the feature whose categories define the columns. For example, to analyze the relationship between options on universal basic income and political ideology, we can create a cross-tab with categories of universal_income in the rows and categories of ideology in the columns:

pd.crosstab(anes.universal_income, anes.ideology)

| ideology | Conservative | Liberal | Moderate |

|---|---|---|---|

| universal_income | |||

| Favor a great deal | 55 | 200 | 102 |

| Favor a little | 72 | 129 | 114 |

| Favor a moderate amount | 46 | 154 | 102 |

| Neither favor nor oppose | 135 | 181 | 241 |

| Oppose a great deal | 717 | 55 | 219 |

| Oppose a little | 46 | 55 | 76 |

| Oppose a moderate amount | 82 | 46 | 73 |

Notice that the categories for each feature are listed in alphabetical order. That’s the default behavior when columns are coded as an object data type. But in this case we want to reorder the categories from least to most support for a universal basic income, and from liberal to conservative with moderate in the middle. To reorder categories, we first change each column to a category data type:

anes['universal_income'] = anes['universal_income'].astype('category')

anes['ideology'] = anes['ideology'].astype('category')

Then we can use the .cat.reorder_categories() method to place the categories in the order in which we want to list them in the table:

anes['universal_income'] = anes['universal_income'].cat.reorder_categories(['Oppose a great deal',

'Oppose a moderate amount',

'Oppose a little',

'Neither favor nor oppose',

'Favor a little',

'Favor a moderate amount',

'Favor a great deal'])

anes['ideology'] = anes['ideology'].cat.reorder_categories(['Liberal','Moderate','Conservative'])

pd.crosstab(anes.universal_income, anes.ideology)

| ideology | Liberal | Moderate | Conservative |

|---|---|---|---|

| universal_income | |||

| Oppose a great deal | 55 | 219 | 717 |

| Oppose a moderate amount | 46 | 73 | 82 |

| Oppose a little | 55 | 76 | 46 |

| Neither favor nor oppose | 181 | 241 | 135 |

| Favor a little | 129 | 114 | 72 |

| Favor a moderate amount | 154 | 102 | 46 |

| Favor a great deal | 200 | 102 | 55 |

Raw counts are not always the most informative statistic to place within the cells. We can convert these counts to percents. There are three kinds of percent statistics: row percents, which calculate the quotient of the count to the row total, column percents, which calculate the quotient of the counts to the column total, and cell percents, which calculate the quotient of the count to the overall total. The choice of percent leads to a different interpretation of the table. For example, to display the row percents instead of counts, use the normalize='index' argument. If we want, we can multiply the result by 100 to display percents instead of proportions and we can round to 2 decimal places:

(pd.crosstab(anes.universal_income, anes.ideology, normalize='index')*100).round(2)

| ideology | Liberal | Moderate | Conservative |

|---|---|---|---|

| universal_income | |||

| Oppose a great deal | 5.55 | 22.10 | 72.35 |

| Oppose a moderate amount | 22.89 | 36.32 | 40.80 |

| Oppose a little | 31.07 | 42.94 | 25.99 |

| Neither favor nor oppose | 32.50 | 43.27 | 24.24 |

| Favor a little | 40.95 | 36.19 | 22.86 |

| Favor a moderate amount | 50.99 | 33.77 | 15.23 |

| Favor a great deal | 56.02 | 28.57 | 15.41 |

Because these are row percents, the numbers on each row add to 100. The first row is interpreted as follows: of the people who oppose a universal basic income a greal deal, 72.35% are conservative, 22.1% are moderate, and 5.55% are liberal.

To display column percents, we use the normalize='columns' argument:

(pd.crosstab(anes.universal_income, anes.ideology, normalize='columns')*100).round(2)

| ideology | Liberal | Moderate | Conservative |

|---|---|---|---|

| universal_income | |||

| Oppose a great deal | 6.71 | 23.62 | 62.19 |

| Oppose a moderate amount | 5.61 | 7.87 | 7.11 |

| Oppose a little | 6.71 | 8.20 | 3.99 |

| Neither favor nor oppose | 22.07 | 26.00 | 11.71 |

| Favor a little | 15.73 | 12.30 | 6.24 |

| Favor a moderate amount | 18.78 | 11.00 | 3.99 |

| Favor a great deal | 24.39 | 11.00 | 4.77 |

Here, the numbers in each column add to 100. The first row now has a different interpretation: 6.71% of liberals, 23.62% of moderates, and 62.19% of conservatives oppose a universal basic income a great deal.

To display cell percents, we use the normalize=True argument:

(pd.crosstab(anes.universal_income, anes.ideology, normalize=True)*100).round(2)

| ideology | Liberal | Moderate | Conservative |

|---|---|---|---|

| universal_income | |||

| Oppose a great deal | 1.90 | 7.55 | 24.72 |

| Oppose a moderate amount | 1.59 | 2.52 | 2.83 |

| Oppose a little | 1.90 | 2.62 | 1.59 |

| Neither favor nor oppose | 6.24 | 8.31 | 4.66 |

| Favor a little | 4.45 | 3.93 | 2.48 |

| Favor a moderate amount | 5.31 | 3.52 | 1.59 |

| Favor a great deal | 6.90 | 3.52 | 1.90 |

Here all the cells added together add to 100. The first row is now interpreted as: 1.9% of people are liberal and oppose a universal basic income a greal deal, 7.55% of people are moderate and oppose a universal basic income a greal deal, and 24.72% of people are conservative and oppose a universal basic income a greal deal.

We can populate the cells with statistics other than counts and percents. These cells are calculated from a third column, which we specify with the values parameter. We use the aggfunction parameter to determine the function to apply within each cell; to report the means, we write aggfunction='mean'. We can, for example, create a cross-tab with liveurban (whether the person lives in a city, a rural area, a suburb, or a town) and ideology, and we can populate the cells with average values of partisanship, the difference between Biden’s thermometer rating and Trump’s thermometer rating:

pd.crosstab(anes.liveurban, anes.ideology,

values=anes.partisanship, aggfunc='mean').round(2)

| ideology | Liberal | Moderate | Conservative |

|---|---|---|---|

| liveurban | |||

| City | 56.66 | 21.66 | -45.38 |

| Rural | 46.16 | 6.45 | -64.23 |

| Suburb | 55.42 | 21.39 | -63.63 |

| Town | 52.12 | 19.71 | -63.97 |

People who live in cities and are liberal rate Biden 56.66 points higher than Trump on average, and people who are conservative and live in suburbs rate Trump 63.63 points higher than Biden on average.

10.4.4. Hypothesis Tests#

Most people, when they first start learning statistics, learn about hypothesis testing in their very first course. Learning hypothesis testing so early also means that, for many people, their first course on statistics is their last course on statistics. Hypothesis tests can seem arbitrary and technical. It’s a shame, because these tests can be useful tools for exploring a dataset.

Part of the problem is that hypothesis tests have been generally over-emphasized in many fields that apply statistics for data analysis. Relying too much on a hypothesis test can obscure important aspects of relationships in the data and boil everything down to an oversimplified “significant or not” heuristic.

It is better to think of hypothesis tests as ways to measure certainty about what we see regarding relationships and comparisons in the data. When we look at descriptive statistics, correlations, conditional means, or cross-tabs, we can get a sense of how features in the data relate to one another on average, but we do not get a sense of how much variability exists around these effects. A hypothesis test provides a clear statement about whether enough evidence exists in the data for us to conclude that a relationship is real and not simply a byproduct of randomness.

There are different hypothesis tests for different situations. A \(t\)-test is useful for comparing the means of two columns in a dataframe, or for testing whether the mean of a column varies across groups in the dataframe. An ANOVA with an \(f\)-statistic is useful for comparing the means of several columns at once. A \(\chi^2\) (chi-square) test of association helps us see whether the frequency of categories for one feature depends on the category of another feature. For each test, we calculate a \(p\)-value that helps us draw a conclusion about the whether we have enough evidence to conclude that some effect or difference exists.

Here’s how hypothesis tests work:

Suppose that I grab a soapbox and place it in the middle of Times Square in New York City, and I hop on to it, lift a megaphone to my mouth, and yell

Suppose for a moment that this insane thing to say is actually true. Then think about how cats and dogs behave in the real world. How compatible is the real world behavior of cats and dogs with the assertion that cats are small dogs? For one, cats like to sit on windowsills, while dogs seldom have the patience to sit on a windowsill for very long. In that way, if cats are small dogs, then cats are very unusual dogs. Also, dogs like to fetch and they respond when we call them by name. I’ve never seen a cat that would fetch or react at all to its name. In that way, again, if cats are small dogs, then cats are very peculiar dogs. We are left with one of two conclusions. Either cats are randomly the strangest collection of dogs in the world, or the initial assumption that cats are small dogs was wrong.

Hypothesis testing follows the exact same logic. First we make an assumption about the data. Then we look at the data to see how compatible the data are with that assumption. If the data are not very compatible with the assumption, then we either conclude that our particular sample is a very unlikely draw, or that our initial assumption is false.

The initial assumption is called a null hypothesis. Based on what we see in the data, we will conclude either that the null hypothesis is wrong, or that our sample isn’t big enough or different enough from the population to conclude that the null hypothesis is wrong. We don’t use hypothesis tests to conclude that the null hypothesis is true. For example, suppose that when I observe the behavior of cats and dogs, I see some cats that behave like this:

from IPython.display import IFrame

IFrame(src="https://www.youtube.com/embed/3f_h0rxhD9I", width="560", height="315")

Having observed some adorable cats acting like dogs, I don’t have enough evidence that cats are all that different from dogs. So I don’t reject the assumption that cats are small dogs. But at the same time, I don’t accept that cats are small dogs as truth. I leave the door open to either possibility because the evidence is mixed.

In statistical hypothesis testing, our null hypotheses are statements that there is no relationship between two features, or that there is no difference between quantities we are examing. We start by assumming the null hypothesis is true. Then we calculate a descriptive statistic called a test statistic that describes the relationship or comparison described in the null hypothesis. If the test statistic is large enough, we reject the null hypothesis that there is no relationship or that the features have equal means. If the test statistic is not large enough, or if there isn’t much data, we would fail to reject the null hypothesis, leaving the door open to the null hypothesis being true or false.

10.4.4.1. Understanding What \(p\)-Values Mean and Don’t Mean#

A \(p\)-value is: the probability that a test statistic could be as extreme as it is in the sample under the assumption that the null hypothesis (no relationship, equal means, etc.) is true.

For example, suppose we have a random sample of cats and dogs and for each cat and dog we measure the number of minutes each day the cat or dog spends resting on the windowsill. Across the entire sample, we find that cats spend 60 more minutes a day on the windowsill than dogs on average. Here the difference of 60 is our test statistic. Now suppose that the null hypothesis that cats are small dogs is true. Under this assumption, cats and dogs should spend an equal amount of time on the windowsill on average. In any given random sample, however, we might observe some differences between these averages. We can calculate the probability that the difference could be 60 or larger due to a randomly drawn sample. If this probability is really low then we can conclude that the difference cannot be due to an odd draw for our random sample of cats and dogs, and if the difference isn’t due to sampling variation, then it must be due to a real-life difference between cats and dogs. Then we reject the initial null hypothesis and conclude that cats are not small dogs.

How small should the probability be before we reject the null hypothesis? There are commonly used cutoffs in different fields. The most commonly used standard is \(p < 0.05\), but some fields require more stringent standards like \(p < 0.01\) or smaller. If a \(p\)-value is less than the cutoff we use, then we say that a hypothesis test yields a statistically significant result. Statistical significance means only that we have enough evidence to reject the null hypothesis, nothing more.

A two-tailed test (no pun intended with the cats and dogs example) allows for the possibility that the test statistic can be positive or negative. In the example, if we have no reason to expect cats or dogs to sit on the windowsill for longer, then we can consider both the situation in which cats sit on the windowsill more than dogs, and the situation in which dogs sit on the windowsill more than cats. Our \(p\)-value will be the probability that the test statistic, the average difference, could be greater than or equal to 60 if cats sit longer than dogs, or less than or equal to -60 if dogs sit longer than cats. In formal mathematical notation, let \(t\) be the average difference: the two-tailed \(p\)-value in this example is $\( p = Pr(|t| \geq 60) = Pr(t \leq -60 \text{ or } t \geq 60).\)$

A one-tailed test only considers the possibility of extremity in only one direction. We might be willing to entertain the possibility that cats sit on the windowsill longer than dogs, but not the possibility that dogs sit longer than cats. If this is the case, we calculate the probability that the difference is greater than 60 in a random sample, assuming that there is no difference in the population of cats and dogs, but we do not calculate the probability that the difference is less than -60. The formal definition of the \(p\)-value in this case is $\( p = Pr(t \geq 60).\)\( In practice, a one-tailed test means that we require less evidence in order to reject the null hypothesis. If we already expect cats to sit longer than dogs, then smaller differences will convince us that cats are not small dogs. Two-tailed \)p\(-values will in general be double the size of a one-tailed value, so if the one-tailed \)p\(-value is .03 the two-tailed \)p\(-value will be .06 which means we would reject the null at the \)p<.05$ standard with a one-tailed test, but not with a two-tailed test.

In the examples below we will use Python code to conduct hypothesis tests and to calculate \(p\)-values. In a course on mathematical statistics we would calculate these \(p\)-value by hand, but that is beyond our scope here. We need to know how to run these tests and how to interpret the results.

Interpretation of \(p\)-values is a big challenge because \(p\)-values are very commonly misused and misinterpreted in applied statistics. Although the vast majority of published research articles that use statistics use \(p\)-values as definitive evidence of conclusions, we are currently in the midst of a major pushback against the use of \(p\)-values in applied research. The American Statistics Association, the main professional organization for the academic field of statistics, published “The ASA’s Statement on \(p\)-Values: Context, Process, and Purpose” in 2016, which made a compelling argument against the status quo’s use of \(p\)-values. The field of data science uses \(p\)-values a great deal less than other disciplines because of these issues and because \(p\)-values do not tell us much about the predictive accuracy of models.

For us, \(p\)-values are most useful as ways to conduct EDA. While descriptive statistics show us some basic properties of features and of relationships in the data, hypothesis tests are useful for measuring the strength of evidence that the properties and relationships we see are characteristic of something real, as opposed to generated from randomness in the sample. But it’s important not to rely on \(p\)-values too much. There are a few things to keep in mind about \(p\)-values before using one to draw a conclusion about a comparison or a relationship in the data:

\(p\)-values depend on the size of the sample. The bigger the sample size, in general, the lower the \(p\)-values. The idea here is that more data equates to more evidence, and with more evidence we are more likely to conclude that any difference in the data is real and not due to an idiosyncratic sample. The amount of data used to conduct the test (after accounting for the number of test statistics we need to estimate) is called the degrees of freedom for the test, and \(p\)-values always decline as the degrees of freedom includes. That means that if we have a lot of data, then all of our test results will be significant! In that case, \(p\)-values don’t tell us very much at all.

\(p\)-values don’t tell us about the size of an effect or about how much of a difference there is between groups. It’s possible to have a very small \(p\)-value attached to a very small and possibly negligible effect. Don’t confuse low \(p\)-values with the “strength” of a finding.

\(p\)-values don’t demonstrate that one feature causes another. There are a whole lot of other factors that we need to take into consideration to make a statement about causality.

If we are doing multiple hypothesis tests, each with a \(p\)-value, then the probability of a false positive result goes up with the number of tests. Suppose that we do 20 tests, for example, and in each test suppose that the null hypothesis is true (so that we should never reject the null hypothesis). \(p=.05\) means that there is a 1 in 20 chance of getting a significant result and rejecting the null hypothesis when we shouldn’t. So, because we conduct 20 tests, we should expect to reject a null hypothesis for one of the tests when we should not do so. XKCD comics illustrates this point with jellybeans:

10.4.4.2. Comparison of Means Tests#

One set of hypothesis tests consider whether the mean of a column differs from a specified value, differs depending on groups in the data, or differs from the mean of another column in the data. Comparing a mean to a pre-specified value is called a one-sample \(t\)-test, comparing the mean across groups is called an independent samples \(t\)-test, and comparing the means of different columns is a paired \(t\)-test. The functions for these hypothesis tests exist in the stats module of the scipy package. To run a one-sample \(t\)-test, use stats.ttest_1samp(), to run an independent sample \(t\)-test, use stats.ttest_ind(), and to run a paired \(t\)-test, use stats.ttest_rel().

The mean thermometer rating for Joe Biden is

anes.ftbiden.mean()

42.15189466923571

We might be interested in whether there exists sufficient evidence in the data to conclude that the mean is statistically significantly different from 40. To run a one-sample \(t\)-test, we type

mytest = stats.ttest_1samp(anes['ftbiden'].dropna(), 40)

mytest

Ttest_1sampResult(statistic=3.5913467876717964, pvalue=0.0003340593853690189)

Note that in the above code we have to use the .dropna() method to calculate conduct the test only on the non-missing observations of ftbiden. Let’s denote the mean Biden thermometer rating in the population as \(\mu\). The null hypothesis in this case is

$\(H_0: \mu = 40\)\(

The \)p\(-value is the probability that a sample of 3,164 observations could have a mean at least 2.152 units away from (greater than or less than, since this is a two-tailed test) 40 if *we assume that the true mean in the population is 40*. Here the \)p$-value is .0003, which is quite a bit smaller than the .05 standard we use to reject the null hypothesis. So we say that the mean thermometer rating for Joe Biden is statistically significantly different from 40.

The mean Biden thermometer rating for men and the mean Biden thermometer rating for women are

anes.groupby('sex').agg({'ftbiden':'mean'})

| ftbiden | |

|---|---|

| sex | |

| Female | 44.882759 |

| Male | 39.284398 |

We see a difference between these two conditional means: specifically, women have a rating for Biden that is about 5.2 points higher on average than the ratiings from men. We want to test whether we have enough evidence in the data to reject the idea that these means are equal in the population. Let the mean Biden thermomemter for men is \(\mu_m\) and let the mean Biden thermometer for women be \(\mu_w\). We will run an independent samples \(t\)-test in which our null hypothesis is

$\( H_0: \mu_m = \mu_w \)$

To run this test, we first create two new Python variables, one containing the values of ftbiden for men, and one containing the values of ftbiden for women, removing the missing values from both series:

ftbiden_men = anes.query("sex=='Male'").ftbiden.dropna()

ftbiden_women = anes.query("sex=='Female'").ftbiden.dropna()

To run the independent samples \(t\)-test, we pass the two series to stats.ttest_ind() with the equal_var=False option because we cannot assume that the two series have the same variance. The code is:

stats.ttest_ind(ftbiden_men, ftbiden_women, equal_var=False)

Ttest_indResult(statistic=-4.684509884485571, pvalue=2.927022297618164e-06)

Here the \(p\)-value is about .0000002, which is the probability that under the assumption that men and women approve of Biden equally, on average, that we could draw a sample with a difference between these two means of 4.68 or higher. Because this probability is lower than .05, we can reject the null hypothesis and conclude that there is a statisitically significant difference between men and women in terms of how highly they rate Joe Biden.

Next, suppose that we want to compare the average rating of Trump to the average rating of Biden. These two means, and their difference, are:

{'trump':anes.fttrump.mean(),

'biden':anes.ftbiden.mean(),

'difference':anes.fttrump.mean() - anes.ftbiden.mean()}

{'trump': 43.87000958160332,

'biden': 42.15189466923571,

'difference': 1.7181149123676107}

We can use a paired \(t\)-test to determine whether there is enough evidence in the data to reject the idea that the two candidates have the same mean in the population. Let the mean rating of Trump be \(\mu_t\) and let the mean rating of Biden be \(\mu_b\). The null hypothesis in this case is $\( H_0: \mu_t = \mu_b \)$ To run this test, we first create a subset of the dataframe that contains only the columns we want to compare, and we remove all rows that are missing on any of these columns:

anes_ttest = anes[['fttrump', 'ftbiden']].dropna()

stats.ttest_rel(anes_ttest['fttrump'], anes_ttest['ftbiden'])

Ttest_relResult(statistic=1.6327284676310017, pvalue=0.10262803725374475)

Then we pass this columns in this subset to the stats.ttest_rel() function:

stats.ttest_rel(anes_ttest['fttrump'], anes_ttest['ftbiden'])

Ttest_relResult(statistic=1.6327284676310017, pvalue=0.10262803725374475)

The \(p\)-value, about 0.1, is the probability that a sample could have produced a difference in means of 1.72 or greater in either direction if the truth is that the columns have the same mean in the population. Because this \(p\)-value is greater than .05, we fail to reject the null hypothesis that the two candidates have the same average thermometer rating.

Please note, failing to reject the null hypothesis is NOT the same thing as accepting the null hypothesis. We cannot conclude from this test result that Trump and Biden have the same average rating. We just don’t have enough evidence to rule out that possibility. The thermometers might be the same or different.

10.4.4.3. Tests of Multiple Comparisons#

In the previous section, we compared the mean of one column in the dataframe as it differs across two distinct groups in the data. We can also compare the mean as it differs across more than two groups. For example, we can look at the average age of Democrats, independents, and Republicans:

anes.groupby('partyID').agg({'age':'mean'})

| age | |

|---|---|

| partyID | |

| Democrat | 50.734894 |

| Independent | 48.705104 |

| Republican | 56.350877 |

If we wanted to compare Democrats to Republicans, Democrats to independents, or Republicans to independents, we can use stats.ttest_ind() to make these comparisons. But suppose we wanted to compare all three at once. Let \(\mu_d\) be the average age of Democrats, \(\mu_i\) be the average age of independents, and \(\mu_r\) be the average age of Republicans. The null hypothesis we want to assess is

$\( H_0: \mu_d = \mu_i = \mu_r \)\(

Another way to write the null hypothesis is as three hypotheses that we assess simultaneously: